Google’s Gemini 2.5 Just Learned to Browse Like a Human. Here’s the Catch

Google finally gave its AI actual web browsing skills. The new Gemini 2.5 Computer Use model can click buttons, fill forms, and navigate websites without human help.

Sounds impressive. But Google’s late to this party, and its approach has some notable limitations compared with what competitors already offer. Let’s break down what this model can and can’t do.

How Computer Use Actually Works

Gemini 2.5 Computer Use analyzes your request and completes every step needed to fulfill it. It clicks, types, scrolls, and submits forms just like you would.

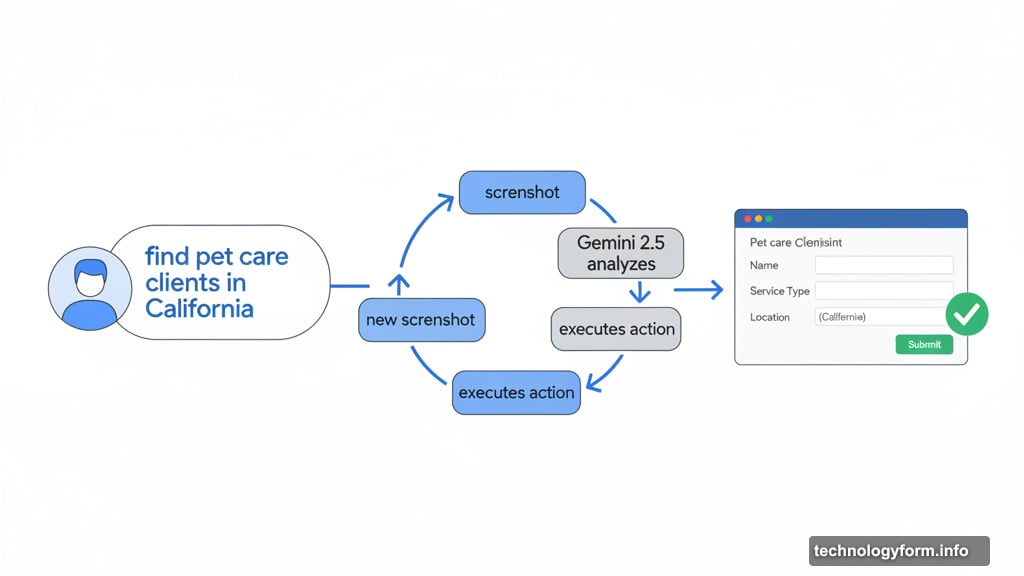

The process runs in a loop. First, your code sends the request along with a screenshot of the current page and a history of recent actions. The model analyzes these inputs and responds with the next UI action to take, such as a click or a keystroke. Client-side code executes that action, captures a new screenshot, and sends it back to the model. The loop repeats until the task is complete.
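That loop can be sketched in a few lines of Python. This is a minimal, hypothetical sketch: the `model`, `execute`, and `screenshot` callables stand in for a real Gemini API call, a browser driver, and a screen capture, and the `"done"` sentinel is an assumption of this sketch, not how the real API signals completion.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Action:
    name: str                                  # hypothetical action name, e.g. "click_at"
    args: dict = field(default_factory=dict)   # action parameters


# Hypothetical signatures: the model sees the goal, the latest screenshot,
# and the action history, then proposes exactly one next action.
ModelFn = Callable[[str, bytes, List[Action]], Action]
ExecuteFn = Callable[[Action], None]
ScreenshotFn = Callable[[], bytes]


def run_agent_loop(goal: str, model: ModelFn, execute: ExecuteFn,
                   screenshot: ScreenshotFn, max_steps: int = 20) -> List[Action]:
    """Repeat: send state to the model, execute its action, capture new state."""
    history: List[Action] = []
    shot = screenshot()
    for _ in range(max_steps):              # hard cap so a confused model can't loop forever
        action = model(goal, shot, history)
        if action.name == "done":           # assumed completion signal (sketch only)
            break
        execute(action)                     # client-side code performs the UI action
        history.append(action)
        shot = screenshot()                 # fresh page state goes back to the model
    return history


# Toy stand-ins so the loop runs without a browser or an API key.
script = [Action("navigate", {"url": "https://example.com"}),
          Action("click_at", {"x": 100, "y": 200}),
          Action("done")]


def fake_model(goal: str, shot: bytes, history: List[Action]) -> Action:
    return script[len(history)]             # replay the scripted actions in order


performed: List[Action] = []
steps = run_agent_loop("demo task", fake_model, performed.append, lambda: b"png")
print([a.name for a in steps])  # ['navigate', 'click_at']
```

The `max_steps` cap is a design choice worth keeping in any real version: every extra turn is another paid request.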

For instance, you could ask it to find all pet care clients in California from one website, then add them to your spa CRM on another site. The model handles every step between those two points. It navigates both sites, extracts the right data, and inputs it where needed.

However, there’s a significant constraint. The model only works in web browsers. It can’t control your entire operating system like some competitors can.

Thirteen Actions and Nothing More

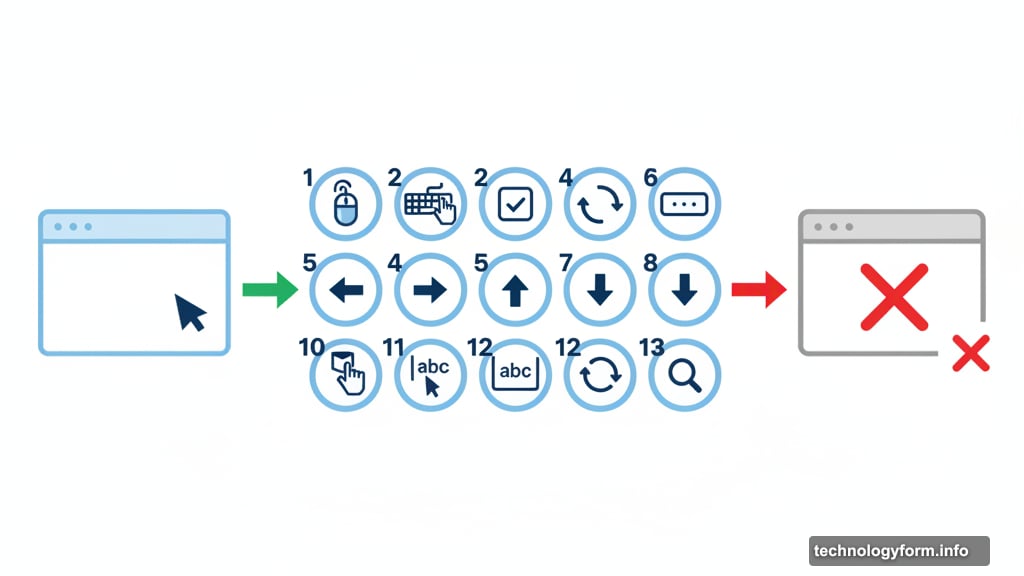

The model currently supports just 13 predefined UI actions. That includes basic operations like clicking, typing, and scrolling. And Google says it isn’t yet optimized for desktop OS-level control.
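Because the action set is fixed, client code can refuse anything outside it before touching the browser. The sketch below assumes the 13 action names listed in Google’s developer documentation at launch (verify them against the current docs); the handler signatures and the log-only handlers are hypothetical stand-ins for a real browser driver.

```python
from typing import Callable, Dict

# Assumed action names, as listed in Google's docs at launch; verify before use.
SUPPORTED_ACTIONS = frozenset({
    "open_web_browser", "wait_5_seconds", "go_back", "go_forward",
    "search", "navigate", "click_at", "hover_at", "type_text_at",
    "key_combination", "scroll_document", "scroll_at", "drag_and_drop",
})


def make_dispatcher(handlers: Dict[str, Callable[..., None]]) -> Callable[[str, dict], None]:
    """Build a dispatcher that only runs actions from the supported set."""
    unknown = set(handlers) - SUPPORTED_ACTIONS
    if unknown:
        raise ValueError(f"handlers for unsupported actions: {sorted(unknown)}")

    def dispatch(name: str, args: dict) -> None:
        if name not in SUPPORTED_ACTIONS:
            raise ValueError(f"unsupported action {name!r}")
        handler = handlers.get(name)
        if handler is None:
            raise NotImplementedError(f"no client handler for {name!r}")
        handler(**args)

    return dispatch


# Demo with hypothetical log-only handlers instead of a real browser.
log = []
dispatch = make_dispatcher({
    "navigate": lambda url: log.append(f"goto {url}"),
    "click_at": lambda x, y: log.append(f"click {x},{y}"),
})
dispatch("navigate", {"url": "https://example.com"})
dispatch("click_at", {"x": 10, "y": 20})

try:
    dispatch("open_terminal", {})  # desktop-style action, not in the set
    rejected = False
except ValueError:
    rejected = True

print(len(SUPPORTED_ACTIONS))  # 13
print(log)                     # ['goto https://example.com', 'click 10,20']
print(rejected)                # True
```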

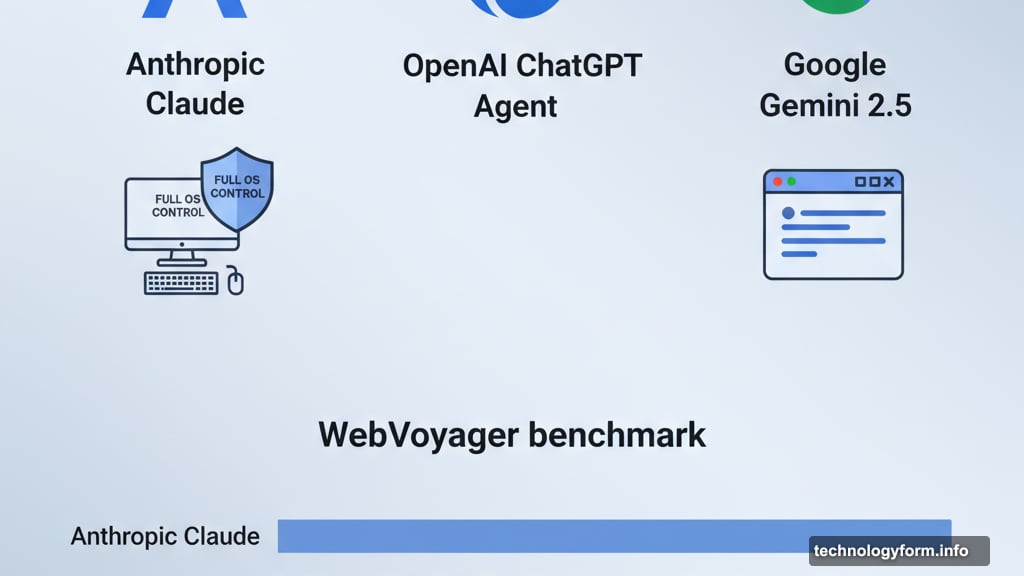

This matters because Anthropic’s Claude and OpenAI’s ChatGPT Agent can already operate a full computer environment, not just a browser. So while Google claims superior performance on web-specific benchmarks, the gap in overall scope remains clear.

Moreover, Google’s demo videos play at three times actual speed, so real-world runs will feel noticeably slower. That matters for time-sensitive workflows.

Still, DeepMind’s researchers say their browser focus paid off. The model outperformed competitors on Online-Mind2Web and WebVoyager benchmarks. It even beat alternatives on AndroidWorld, which tests mobile UI control. So the specialized approach delivers measurable advantages in its target environment.

Late But Potentially Better

Google’s timing here is awkward. Just yesterday, OpenAI expanded ChatGPT Agent’s capabilities, and Anthropic released its computer use feature last year. So Google’s announcement feels reactive rather than innovative.

But performance numbers tell an interesting story. Gemini 2.5 Computer Use achieves the lowest latency for browser control on the Browserbase harness. That means faster response times when you’re actually using it for web tasks.

Plus, the model builds on Gemini 2.5 Pro, and Google says versions of it already power Project Mariner and some agentic features in AI Mode in Search. So Google has real-world testing data informing this release. It’s not purely theoretical.

The question becomes whether browser-only control suffices for most use cases. Many business workflows stay within web applications anyway. SaaS tools, CRMs, and project management systems all run in browsers. So the limitation might matter less than it initially seems.

Pricing Mirrors Gemini 2.5 Pro

Google’s pricing structure aligns closely with standard Gemini 2.5 Pro rates. Input tokens cost $1.25 per million for prompts of up to 200,000 tokens, rising to $2.50 per million for longer prompts. Output tokens cost $10 per million when the prompt stays within 200,000 tokens and $15 per million when it exceeds that.
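To make the tiers concrete, here’s a minimal cost calculator. It assumes, as with standard Gemini 2.5 Pro, that both rates switch tiers based on prompt length. The token counts in the demo are hypothetical; a real session’s count depends on screenshot size and how much context each turn resends.

```python
# Rates in USD per 1M tokens, as quoted above; check Google's pricing page
# before relying on them.
PROMPT_TIER_LIMIT = 200_000  # prompts above this size use the higher rates


def request_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request under the two-tier pricing."""
    long_prompt = input_tokens > PROMPT_TIER_LIMIT
    input_rate = 2.50 if long_prompt else 1.25
    output_rate = 15.00 if long_prompt else 10.00
    return (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000


# Hypothetical token counts, for illustration only.
short_turn = request_cost_usd(10_000, 500)
long_turn = request_cost_usd(250_000, 1_000)
# Each pass through the agent loop is a separate request, so costs add up per step.
twenty_step_task = sum(request_cost_usd(10_000, 500) for _ in range(20))

print(round(short_turn, 4))        # 0.0175
print(round(long_turn, 4))         # 0.64
print(round(twenty_step_task, 2))  # 0.35
```

The multi-step total is the number to watch: a single browser task is many requests, not one.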

But here’s the catch. Standard Gemini 2.5 Pro offers a free tier with token limits. Computer Use doesn’t. You pay from the first request.

That matters for experimentation. Developers can test regular Gemini for free before committing. Computer Use requires upfront investment. So the barrier to entry sits higher, even though per-token costs match.

For production deployments, though, the pricing remains competitive. If you’re already using Gemini 2.5 Pro, adding Computer Use won’t drastically change your bill. The token-based structure means you pay for actual usage, not capability access.

Three Real-World Applications

Browser automation opens practical possibilities. First, data migration between systems becomes simpler. Instead of writing custom scripts, you describe what needs moving and where it goes. The model handles the tedious work of navigating interfaces and matching fields.

Second, research tasks speed up considerably. Ask the model to gather information from multiple sources, compare options, and compile results. It can check prices across retailers, read documentation on competing products, and summarize findings. That’s hours of manual work compressed into minutes.

Third, administrative workflows get easier. Need to schedule meetings across multiple calendars? Update customer records from various sources? Process routine form submissions? Computer Use handles repetitive browser tasks that consume staff time without adding value.

However, accuracy remains crucial. Always verify results for important tasks. AI models make mistakes, and wrong data in your systems causes real problems. So treat this as an assistant that speeds work, not a replacement that eliminates oversight.
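One way to build in that oversight is a validation gate between what the agent extracts and what gets written to your systems. Here’s a minimal sketch based on the earlier pet care example; the `ClientRecord` fields and the checks are hypothetical and would need to match your actual CRM.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ClientRecord:  # hypothetical fields the agent might extract
    name: str
    email: str
    state: str


def split_for_review(records: List[ClientRecord]) -> Tuple[List[ClientRecord], List[Tuple[ClientRecord, List[str]]]]:
    """Pass clean records through; route anything suspicious to a human."""
    approved: List[ClientRecord] = []
    needs_review: List[Tuple[ClientRecord, List[str]]] = []
    for r in records:
        problems = []
        if not r.name.strip():
            problems.append("missing name")
        if "@" not in r.email:
            problems.append("bad email")
        if r.state != "CA":  # the task asked for California clients only
            problems.append(f"state is {r.state!r}, expected 'CA'")
        if problems:
            needs_review.append((r, problems))
        else:
            approved.append(r)
    return approved, needs_review


extracted = [
    ClientRecord("Ana Ruiz", "ana@example.com", "CA"),
    ClientRecord("Bo Lee", "bo-at-example.com", "CA"),
    ClientRecord("Cy Park", "cy@example.com", "NV"),
]
approved, needs_review = split_for_review(extracted)
print([r.name for r in approved])                 # ['Ana Ruiz']
print([(r.name, p) for r, p in needs_review])
```

Only the approved list would go on to the CRM write step; everything else waits for a person.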

Where This Goes Next

Google’s focusing on web browsers for now. But mobile and desktop OS control seem inevitable. The AndroidWorld benchmark results suggest they’re already testing mobile capabilities. Desktop would logically follow.

Moreover, the set of 13 supported actions will likely expand. Each new action type increases what the model can accomplish. So early limitations should ease over time as DeepMind adds functionality.

Yet competition matters here. Anthropic and OpenAI aren’t standing still. They’ll keep improving their computer use features. So Google needs to move faster than it has historically to catch up with and surpass the alternatives.

The real question is integration. Will Computer Use work seamlessly with other Google services? Can it access your Drive files, read your Calendar, and use your saved passwords? Those connections would make it far more powerful than isolated browser control.

Right now, this feels like a solid first step that arrived late. The technology works. The performance numbers look good. But the vision remains incomplete compared to what competitors already shipped.

Developers can access Gemini 2.5 Computer Use through Google AI Studio and Vertex AI starting now. Whether that access justifies the cost depends entirely on your specific workflows and how much browser automation actually helps your business.