AMD Stock Explodes 35% as OpenAI Seals Massive Chip Partnership

AMD just became OpenAI’s newest infrastructure partner. Markets noticed immediately.

Shares jumped over 35% in premarket trading Monday after the companies announced a deal that could hand OpenAI a 10% stake in the chipmaker. This isn’t just another supply agreement. It’s a multibillion-dollar bet that reshapes the AI hardware landscape.

The Deal That Shocked Silicon Valley

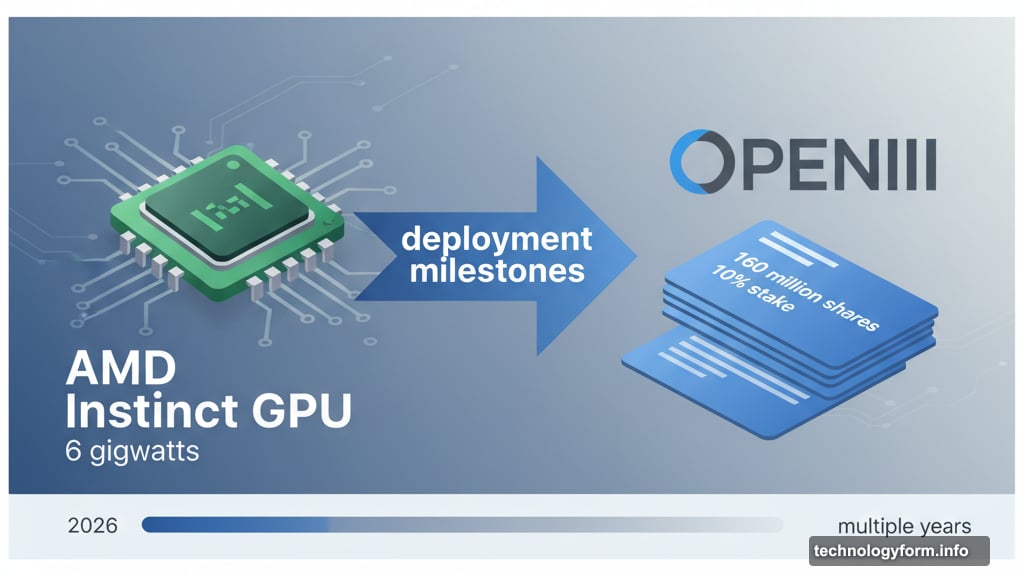

OpenAI committed to deploying 6 gigawatts of AMD Instinct GPUs over multiple years. That’s an enormous amount of computing power.

The rollout starts in late 2026 with 1 gigawatt of chips. Then it scales from there across multiple hardware generations. A gigawatt measures power draw, not chip count, and each one is enough to run hundreds of thousands of high-performance processors working in tandem.

AMD sweetened the arrangement with a warrant for up to 160 million shares. OpenAI can exercise the warrant in tranches as it hits deployment milestones. The first tranche unlocks with the initial gigawatt. Additional shares vest as OpenAI scales toward the full 6-gigawatt target.

If OpenAI exercises the complete warrant, it acquires roughly 10% of AMD. Based on current share counts, that’s a substantial ownership position. The companies called it worth “billions” but refused to specify an exact figure.
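The two figures above, 160 million shares and a stake of roughly 10%, together imply an approximate AMD share count. Here is a quick back-of-the-envelope check in Python. The variable names are illustrative, the 10% is the article's rounded figure, and the sketch ignores any dilution from newly issued shares, so treat the result as a rough estimate rather than a reported number.

```python
# Back-of-the-envelope check using only figures quoted in the article.
# Assumption: "roughly 10%" is treated as exactly 10, and dilution is ignored.
WARRANT_SHARES_MILLIONS = 160   # maximum shares covered by the warrant
STAKE_PERCENT = 10              # approximate stake if fully exercised

# If 160 million shares is about 10% of the company, the implied total is:
implied_shares_millions = WARRANT_SHARES_MILLIONS * 100 / STAKE_PERCENT

print(f"Implied share count: about {implied_shares_millions / 1000:.1f} billion")
```

Running it suggests a share count of about 1.6 billion, which is the scale against which the warrant should be read.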

Why This Matters More Than You Think

AMD has chased Nvidia in AI chips for years. Now it has finally landed a flagship customer building cutting-edge AI systems.

For OpenAI, the partnership solves a critical problem. Relying on a single chip supplier creates risk. Supply chain disruptions can halt entire projects. Plus, diversification gives OpenAI more negotiating power on pricing and delivery schedules.

The timing seems deliberate. OpenAI signed a $100 billion deal with Nvidia just two weeks ago. That agreement combined equity investment with long-term chip supply. Now AMD enters the picture with similar terms but a reversed ownership structure.

Nvidia took a stake in OpenAI. Meanwhile, OpenAI takes a stake in AMD. Different approaches to the same goal of securing critical hardware.

The Trillion-Dollar Infrastructure Race

OpenAI isn’t just buying chips. It’s building physical data centers at unprecedented scale.

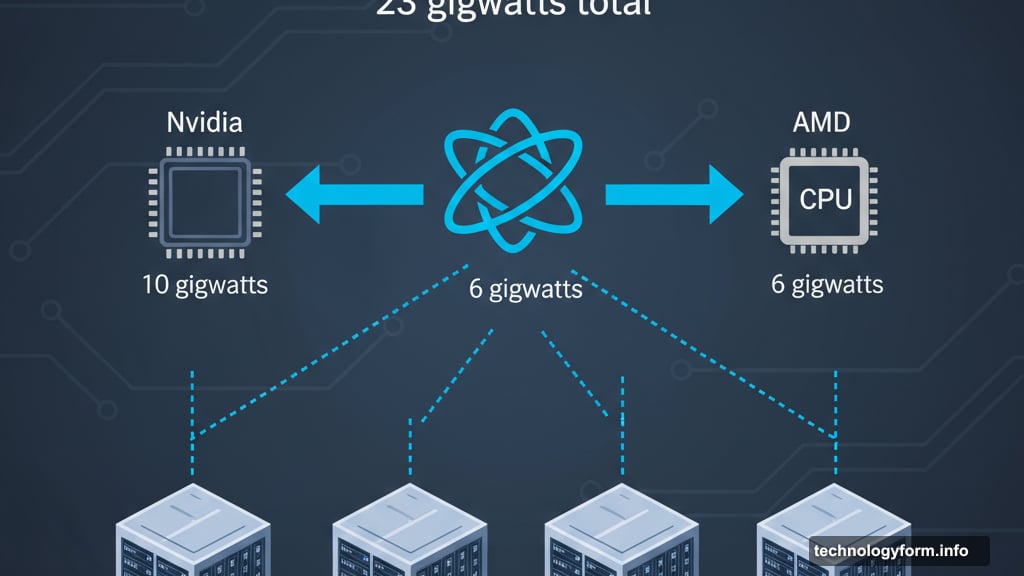

The company’s Stargate project aims for 23 gigawatts of total infrastructure capacity. At roughly $50 billion construction cost per gigawatt, that’s over $1 trillion in spending. The Nvidia and AMD deals together account for 16 gigawatts of that total.
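The arithmetic behind those numbers is easy to reproduce. The short Python sketch below uses only figures quoted in this article: the 23-gigawatt target, the rough $50 billion cost per gigawatt, AMD's 6 gigawatts, and the 10 gigawatts attributed to the Nvidia deal. The variable names are illustrative, and the per-gigawatt cost is an approximation, so the total is an estimate.

```python
# Rough Stargate math using figures quoted in the article.
# Assumption: the "$50 billion per gigawatt" estimate applies evenly to all capacity.
STARGATE_TARGET_GW = 23
COST_PER_GW_USD_BN = 50
NVIDIA_DEAL_GW = 10
AMD_DEAL_GW = 6

total_cost_bn = STARGATE_TARGET_GW * COST_PER_GW_USD_BN   # in billions of dollars
covered_gw = NVIDIA_DEAL_GW + AMD_DEAL_GW                  # capacity tied to the two deals
remaining_gw = STARGATE_TARGET_GW - covered_gw             # capacity not tied to either deal
covered_share = covered_gw / STARGATE_TARGET_GW

print(f"Estimated build cost: ${total_cost_bn / 1000:.2f} trillion")
print(f"Nvidia and AMD deals: {covered_gw} GW ({covered_share:.0%} of target)")
print(f"Remaining capacity: {remaining_gw} GW")
```

On these assumptions the build comes to about $1.15 trillion, with the two chip deals covering 16 of the 23 gigawatts and 7 gigawatts left over.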

OpenAI’s first site in Abilene, Texas already runs Nvidia chips. Additional facilities planned for New Mexico, Ohio and the Midwest will feature mixed hardware including AMD processors. This multi-vendor strategy spreads risk across suppliers.

But here’s what worries analysts. The AI industry increasingly resembles a circular economy where the same companies fund each other. Nvidia supplies capital to buy its own chips. Oracle builds the sites. AMD and Broadcom provide hardware. OpenAI anchors demand.

If any link weakens, the whole chain could face strain. That’s particularly concerning given the massive capital commitments involved.

AMD Finally Gets Its Moment

Lisa Su, AMD’s CEO, called it “a true win-win enabling the world’s most ambitious AI buildout.” She’s not wrong.

AMD spent years developing its Instinct GPU lineup to compete with Nvidia’s dominant data center chips. Technical specs looked promising. But landing major AI customers proved difficult when Nvidia had such strong momentum.

This OpenAI partnership validates AMD’s roadmap. It proves the company can deliver chips that meet demanding AI workload requirements. Plus, it establishes AMD as a serious alternative for companies building large-scale AI infrastructure.

Markets responded enthusiastically to that validation. The 35% stock price jump reflects investor belief that AMD can finally capture meaningful share in the booming AI accelerator market.

What About Nvidia?

Nvidia shares dipped 1% in premarket trading on the news. That’s surprisingly mild considering a major customer just diversified away from exclusive reliance on Nvidia chips.

Perhaps investors recognize that OpenAI’s Nvidia deal remains massive at 10 gigawatts. Or maybe they understand that total AI infrastructure spending is growing so fast that multiple suppliers can thrive simultaneously.

Still, Nvidia must notice the trend. OpenAI is also reportedly in talks with Broadcom about custom chips for next-generation models. That’s two hardware partners beyond Nvidia getting pieces of OpenAI’s spending.

Competition in AI chips is intensifying. Nvidia still dominates. But its dominance looks slightly less absolute than it did a month ago.

The Real Infrastructure Challenge

Building AI data centers at this scale faces practical limits beyond chip supply.

Power grids struggle to deliver 23 gigawatts to single customers. Water cooling systems for dense GPU clusters require massive infrastructure. Construction timelines stretch years even with unlimited budgets.

OpenAI’s aggressive expansion timeline assumes these obstacles get solved. That’s far from guaranteed. Regulatory approvals, grid capacity upgrades and specialized construction skills all create potential bottlenecks.

The company clearly believes throwing money at the problem will work. Over $1 trillion in planned spending suggests serious confidence. But infrastructure buildouts of this magnitude often face unexpected delays regardless of budget.

A Circular Economy That Feels Fragile

The structure of these deals creates interesting dependencies. OpenAI needs chips from AMD and Nvidia. Those chipmakers need massive orders to justify continued R&D spending. Cloud providers need AI workloads to fill their data centers. AI companies need cloud infrastructure to train models.

Everyone depends on everyone else. That works beautifully when the AI boom continues expanding. But it creates systemic risk if growth slows or if any major player stumbles.

Investment flows in circles too. Companies take stakes in each other. They provide capital and buy products simultaneously. Traditional boundaries between customer, supplier and investor blur together.

Financial analysts should watch this circular structure carefully. It might represent efficient capital allocation in a fast-moving market. Or it might indicate an overheated sector where normal business logic gets suspended.

What Comes Next

OpenAI’s hardware strategy now spans multiple partners with billions in committed spending. AMD gained validation as a serious AI chip supplier. Nvidia faces actual competition in a market it previously dominated.

The 2026 timeline for initial AMD chip deployment gives both companies time to refine integration and optimize performance. Meanwhile, construction continues on physical sites that will house the hardware.

Markets clearly love AMD’s position in this deal. The stock price surge reflects genuine optimism about the company’s AI prospects. Whether that optimism proves justified depends on execution over the coming years.

One thing seems certain though. The AI infrastructure race just got significantly more complex and expensive. Buckle up.