Suno’s New AI Music Generator Sounds Perfect. That’s the Problem

Suno just launched version 5 of its AI music generator. The audio quality jumped significantly. Mixes sound cleaner. Instruments separate better. Technical specs improved across the board.

But something’s still missing. Every track feels hollow. The vocals hit every note perfectly, yet convey zero emotion. It’s like listening to a talented singer who doesn’t understand the lyrics they’re performing.

Better Audio Quality Can’t Hide the Emptiness

Suno v5 fixed many technical problems from earlier versions. Previous models would smush instruments together into muddy mixes. Bass, guitar, and synth parts bled into each other.

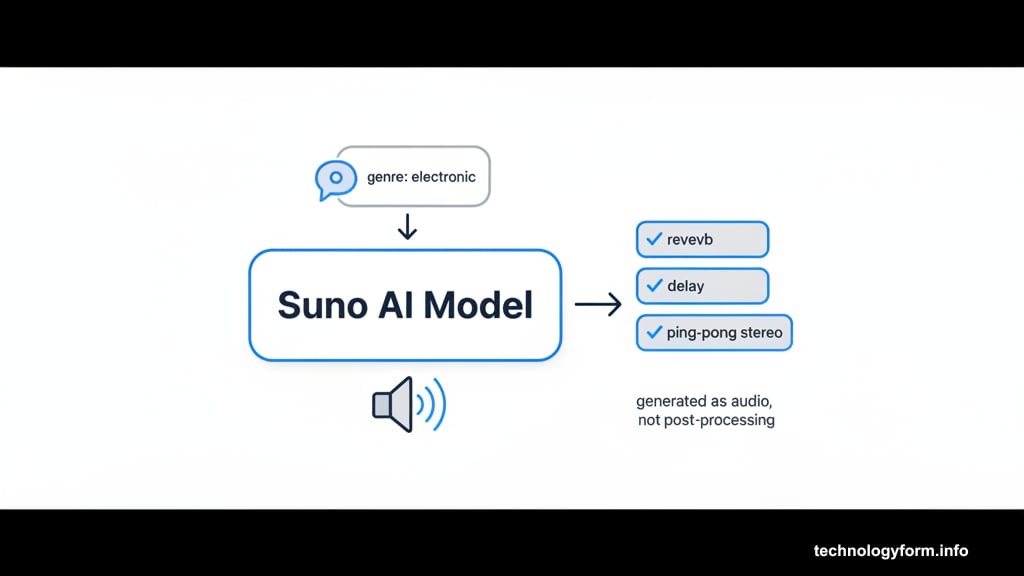

Not anymore. The new model delivers clean separation between elements. During testing, one track included a flute-like synth with what sounded like ping-pong delay. The model understood this was an isolated sound that needed specific treatment in the stereo field.

Here’s the fascinating part. Suno doesn’t actually apply audio effects. Instead, the AI decides what effects should exist based on the genre and style. Then it approximates those effects in the output. So when you hear reverb or delay, the model generated that as part of the audio, not as a post-processing step.

The technical achievement is impressive. But technical prowess doesn’t equal artistic merit.

Genre Understanding Remains Questionable

Suno claims v5 better understands musical genres. My testing suggests otherwise.

I asked for “modern avant R&B with glitchy, but funky drums, atmospheric melodic parts, and breathy vocals.” Both v5 and the previous version got close with downtempo tracks and moody synths. But neither captured the weirdness I wanted. Think Kelela’s experimental production, not generic streaming playlist fodder.

Then I tried “early ’90s lo-fi indie rock recorded on a 4-track cassette recorder with off key vocals and slightly out of tune guitars.” The results were hilariously wrong. Instead of Pavement’s slack noise pop, Suno delivered bombastic tracks with chunky riffs and clean power chords. More Arctic Monkeys than anything released before 2000.

Similarly, asking for “late 1970s krautrock” produced mixed results. Version 4.5+ basically nailed the vibe minus vocals. But v5 often delivered ’80s synthpop or distinctly modern-sounding tracks, even when they had some krautrock DNA.

The model struggles with era-specific requests. It knows broad genres but misses the subtle details that define particular movements or time periods.

More Complex Doesn’t Mean More Interesting

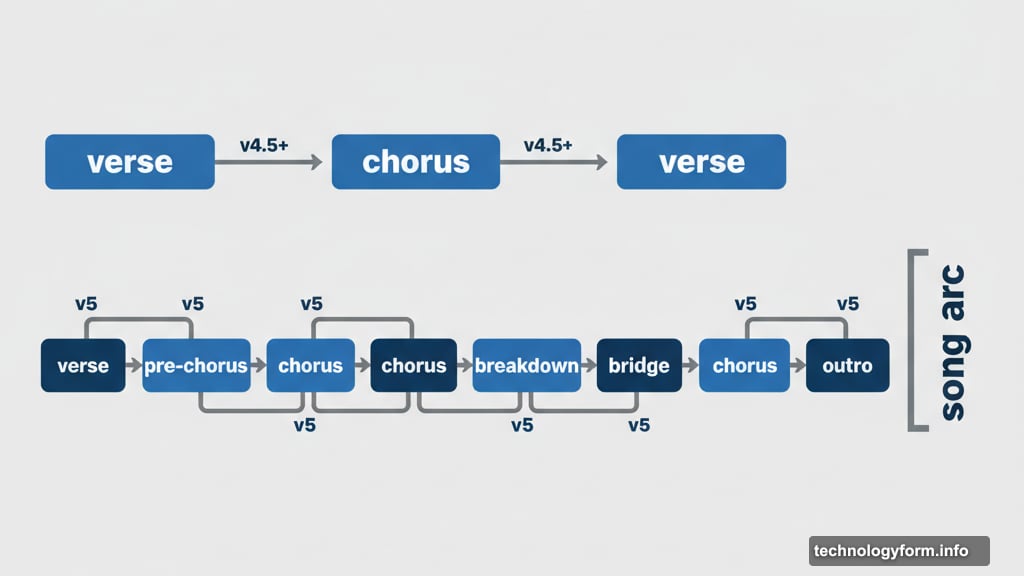

One genuine improvement: v5 creates more complex arrangements. Compared to v4.5+, songs include more musical flourishes and varied structures.

Earlier versions stuck with basic verse-chorus-verse patterns. Maybe a bridge if you were lucky. But v5 adds pre-chorus sections, multiple bridges, breakdowns, and actual song arcs. Tracks build over time rather than just jumping between distinct sections.

I tested the remix feature by uploading a song from my old EP. The results were technically interesting. Suno transcribed parts of my guitar solo into a recurring synth motif. It turned my chord pads into driving arpeggios.

But it stripped out everything that made the original special. My track was raw and lo-fi, recorded in my living room at 3 a.m. six years ago. Suno’s version sounded polished and sterile. The imperfections that gave the song character vanished completely.

That’s the running theme. Suno can mimic superficial features like tape hiss or breath sounds. Yet it always feels fake. The model copies the surface but misses the essence.

Perfect Vocals Sound Inhuman

Suno initially promoted v5’s “emotionally rich vocals” and “human-like emotional depth.” That language quietly disappeared from marketing materials. Now they call the vocals “natural, authentic.”

Even that’s a stretch. Yes, the vocals sound more human than they did in v4.5+. But they’re still stiff. Every performance feels generic and emotionless.

Here’s the problem: there are no edges to any Suno vocals. Everything gets bathed in reverb, layered with harmonies, and pitched perfectly. Even when you explicitly tell the model not to do these things, it ignores you.

I asked for an “unprocessed emotional solo a cappella female vocal performance with no reverb, no harmonies, no effects, just dry vocals.” The two songs came back drenched in reverb with multiple harmony vocalists. One even had what sounded like a bass accompaniment.

According to Suno product manager Henry Phipps, the models don’t yet understand descriptions of specific effects and recording techniques. Vocal performance is influenced by the lyrics and general mood. But the model can’t deliver the raw, unprocessed sound that makes certain performances powerful.

The Emotional Connection Doesn’t Exist

I fed Suno lyrics similar to the Rolling Stones’ “Gimme Shelter.” The output had all the surface elements. Powerful female vocalist. Full, bluesy arrangement. Shouting over distorted guitars.

But zero emotional impact. When I listen to the original “Gimme Shelter,” Merry Clayton’s voice cracking on “rape, murder” makes me choke up. That raw break in her vocal conveys genuine pain and fear.

Suno can’t replicate that. It knows the lyric should sound sad. But it has no actual emotional connection to the words. Because it’s code, not an artist.

The imperfections often carry the emotional weight in music. Robert Smith’s completely out-of-tune warble conveys desperation in The Cure’s “Why Can’t I Be You?” The tangible exhaustion in Kurt Cobain’s breath right before the last line in “Where Did You Sleep Last Night” tells you this man was struggling with real demons.

Trying to make Suno sound “bad” proved futile. Out of tune. Raw. Off key. Sloppy. The model fights against all of it. For all the talk about “natural” vocals, it lacks the imperfections that make performances memorable.

Technical Excellence Without Soul

Version 5 represents clear technical progress. Audio quality improved. Arrangements got more complex. Some mixing issues got fixed.

But Suno still can’t create music that moves people. Every track sounds competent yet hollow. The vocals hit every note while missing every emotion.

Maybe that’s the fundamental limitation of AI-generated art. These models can analyze patterns and reproduce technical elements. They understand that certain lyrics should sound sad or angry or joyful. But understanding isn’t the same as feeling.

Music works when artists pour genuine emotion into their performances. The cracks in voices. The slightly rushed beats. The raw takes that capture something real. Those “imperfections” separate art from technical exercise.

Suno version 5 is technically impressive. But impressive technical specs don’t create meaningful art. Until AI can truly feel something beyond pattern recognition, generated music will remain soulless, no matter how clean the mix sounds.